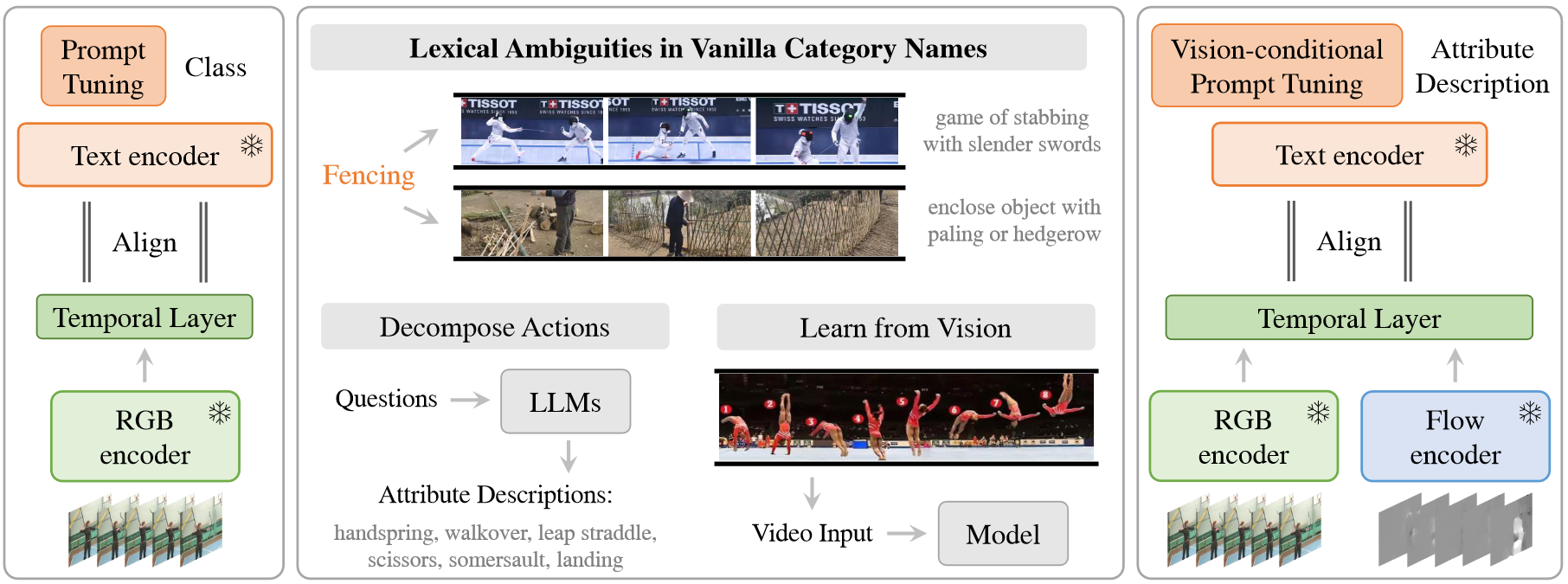

Left: Existing methods add prompt tuning and temporal layers to foundation models. Middle: The main challenge is lexical ambiguity in vanilla category names. To disambiguate text-based category names, we decompose actions by prompting large language models for various action attribute descriptions. For cases where comprehensive, detailed descriptions are difficult to give, we further propose vision-conditional prompting to learn from input videos. Right: Our overall framework.

In this paper, we consider the problem of temporal action localization under low-shot (zero-shot and few-shot) scenarios, with the goal of detecting and classifying action instances from arbitrary categories within untrimmed videos, including categories not seen at training time. We adopt a Transformer-based two-stage action localization architecture with class-agnostic action proposal, followed by open-vocabulary classification. We make the following contributions. First, to complement image-text foundation models with temporal motion, we improve category-agnostic action proposal by explicitly aligning embeddings of optical flow, RGB and text, which has largely been ignored in existing low-shot methods. Second, to improve open-vocabulary action classification, we construct classifiers with strong discriminative power, i.e., classifiers that avoid lexical ambiguities. Specifically, we propose to prompt the pre-trained CLIP text encoder either with detailed action descriptions (acquired from large-scale language models) or with visually-conditioned, instance-specific prompt vectors. Third, we conduct thorough experiments and ablation studies on THUMOS14 and ActivityNet1.3, demonstrating the superior performance of our model, which significantly outperforms existing state-of-the-art methods.

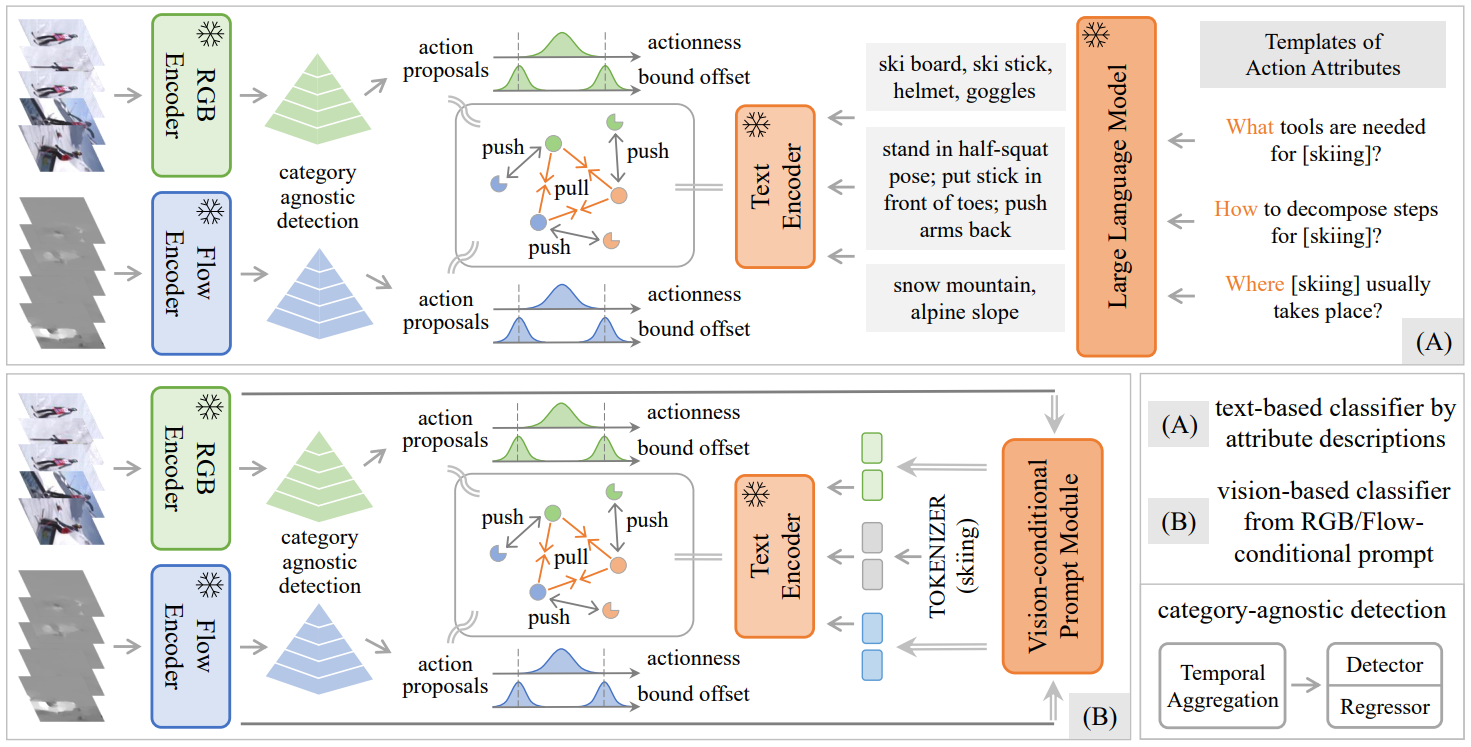

Framework Overview

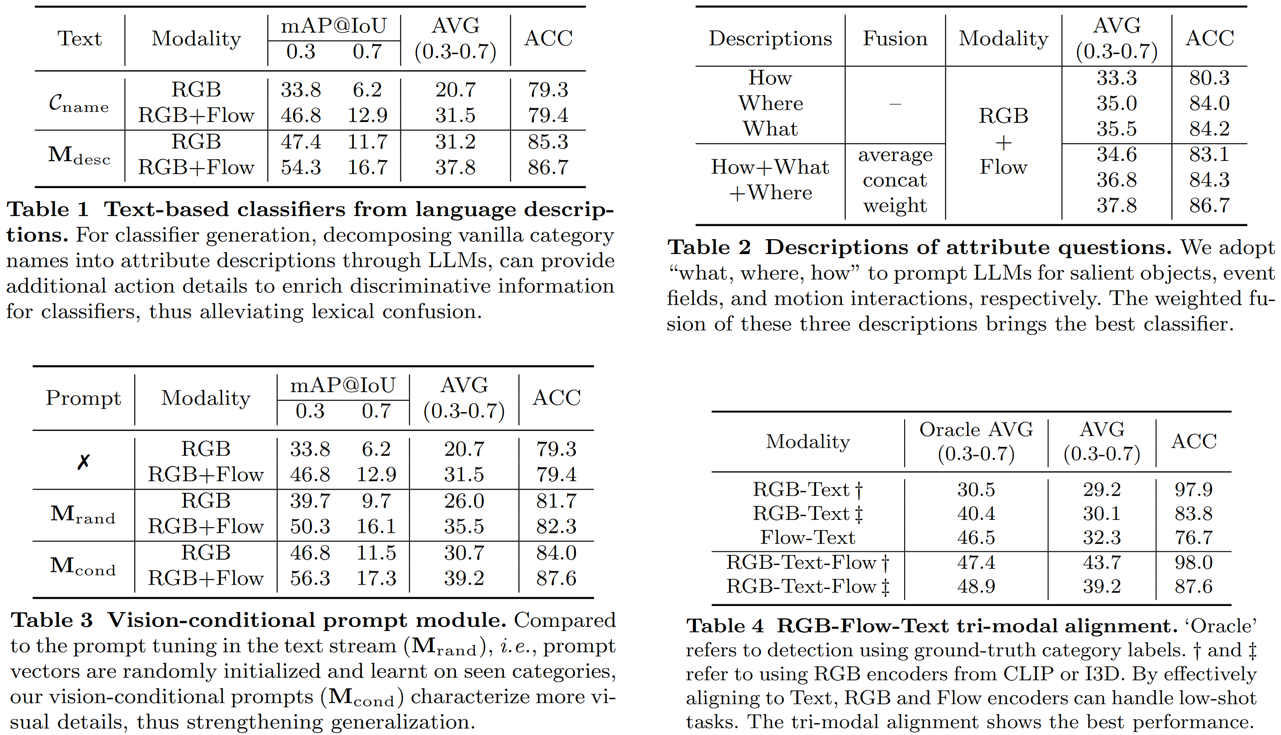

Given one input video, we first encode RGB and Flow into appearance and motion embeddings, then localize category-agnostic action proposals (detecting actionness and regressing boundaries). For open-vocabulary classification, we design (A) text-based classifiers using attribute descriptions from Large Language Models, and (B) vision-based classifiers using (RGB & Flow)-conditional prompt tuning. Finally, the RGB-Flow-Text modalities are aligned for low-shot TAL.
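The open-vocabulary classification step can be illustrated with a minimal sketch: each proposal embedding is compared against per-class text embeddings by cosine similarity, and a softmax turns the similarities into class probabilities. This is not the released implementation. The function names, the temperature value, the class names, and the tiny hand-made vectors (standing in for CLIP text embeddings and proposal features) are all assumptions made for illustration.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-8):
    """Scale vectors to unit length so dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def classify_proposals(proposal_emb, text_emb, temperature=0.07):
    """Return class probabilities of shape (P, C).

    proposal_emb: (P, D) array, one embedding per action proposal.
    text_emb:     (C, D) array, one classifier embedding per category.
    temperature:  softmax temperature (hypothetical value, not from the paper).
    """
    sim = l2_normalize(proposal_emb) @ l2_normalize(text_emb).T  # cosine similarity
    logits = sim / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(logits)
    return probs / probs.sum(axis=1, keepdims=True)


# Toy stand-ins for text embeddings of three illustrative categories.
class_names = ["HighJump", "LongJump", "PoleVault"]
text_emb = np.eye(3, 4)

# Two toy proposals: slightly perturbed copies of classes 2 and 0.
proposal_emb = text_emb[[2, 0]] + 0.1

probs = classify_proposals(proposal_emb, text_emb)
pred = probs.argmax(axis=1)
print([class_names[i] for i in pred])
```

Because the classifier is just a set of text embeddings, a novel category can be added at test time by appending one more row to `text_emb`, without retraining the proposal stage.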

Ablation Studies

Zero-shot Temporal Action Localization

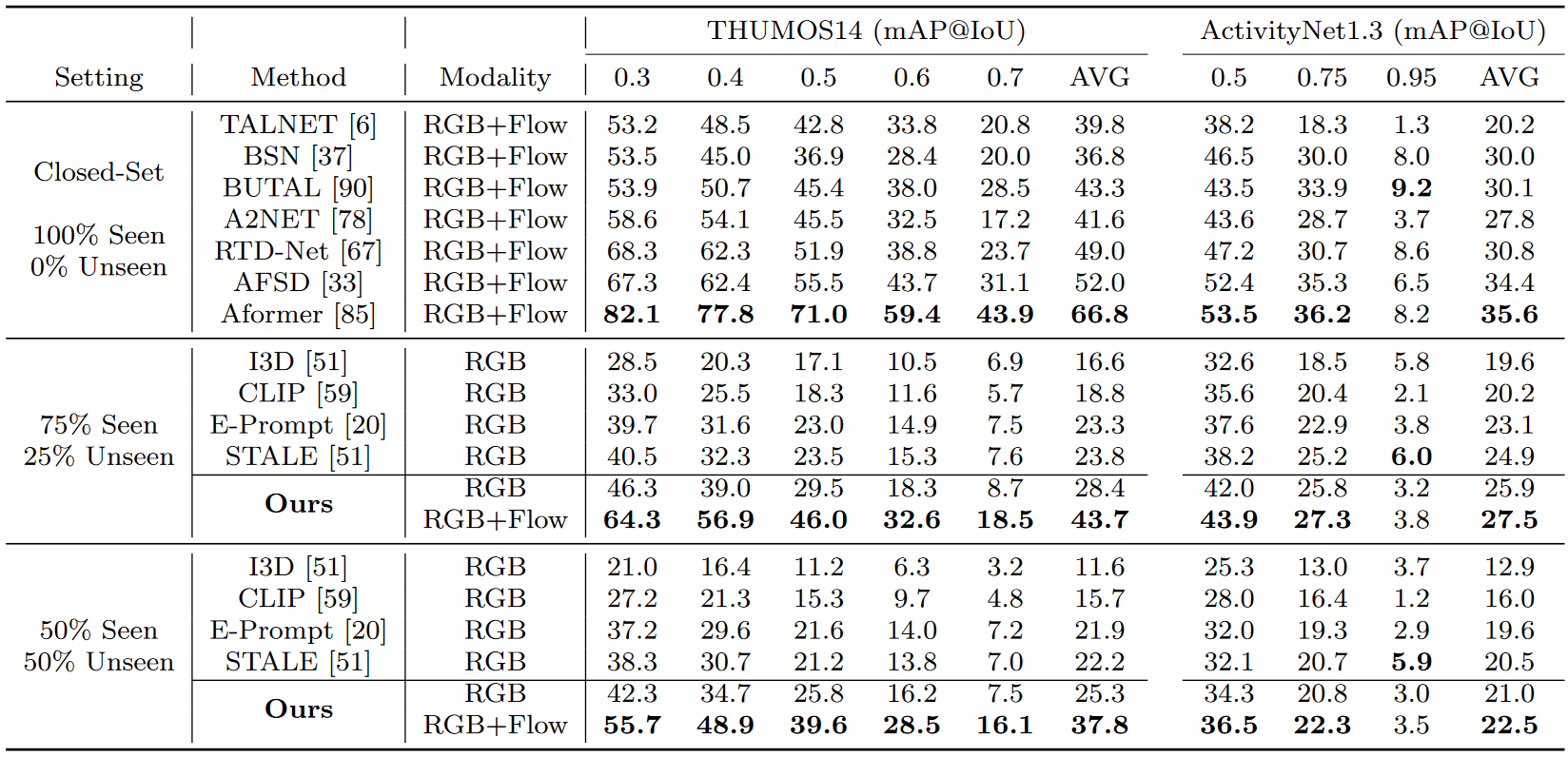

Comparison with state-of-the-art methods. AVG is the average mAP over tIoU thresholds [0.3:0.1:0.7] on THUMOS14, and [0.5:0.05:0.95] on ActivityNet1.3. Using only RGB, our method outperforms all zero-shot studies by a large margin. Adding Flow provides explicit motion inputs, making our method comparable with early closed-set methods.
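The AVG metric can be made concrete with a short sketch. It expands the `start:step:stop` ranges into explicit temporal IoU thresholds, shows how the overlap between a predicted and a ground-truth segment is measured, and averages per-threshold mAP values. The helper names are hypothetical, and computing mAP at each threshold is assumed to be done elsewhere.

```python
import numpy as np


def temporal_iou(pred, gt):
    """Temporal IoU between two (start, end) segments given in seconds."""
    inter = max(0.0, min(pred[1], gt[1]) - max(pred[0], gt[0]))
    union = (pred[1] - pred[0]) + (gt[1] - gt[0]) - inter
    return inter / union if union > 0 else 0.0


def tiou_thresholds(start, step, stop):
    """Expand a start:step:stop range into an inclusive array of thresholds."""
    n = int(round((stop - start) / step)) + 1
    return np.round(np.linspace(start, stop, n), 2)


THUMOS14_TIOU = tiou_thresholds(0.3, 0.1, 0.7)        # 5 thresholds
ACTIVITYNET_TIOU = tiou_thresholds(0.5, 0.05, 0.95)   # 10 thresholds


def average_map(map_per_threshold):
    """AVG: the mean of the mAP values obtained at each tIoU threshold."""
    return float(np.mean(map_per_threshold))


# A prediction covering 0-10 s against a ground truth of 5-15 s overlaps
# for 5 s out of a 15 s union, so it counts as correct only below tIoU 1/3.
print(temporal_iou((0.0, 10.0), (5.0, 15.0)))
print(THUMOS14_TIOU, ACTIVITYNET_TIOU)
```

Note that the two benchmarks use different ranges, so AVG values on THUMOS14 and ActivityNet1.3 are not directly comparable with each other.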

Few-shot Temporal Action Localization

Comparison with state-of-the-art methods. We retrain E-Prompt with its released code and report its few-shot results. Although only a few support samples are given for novel categories, few-shot scenarios obtain considerable gains over zero-shot scenarios. Flow further boosts the RGB performance on both datasets.

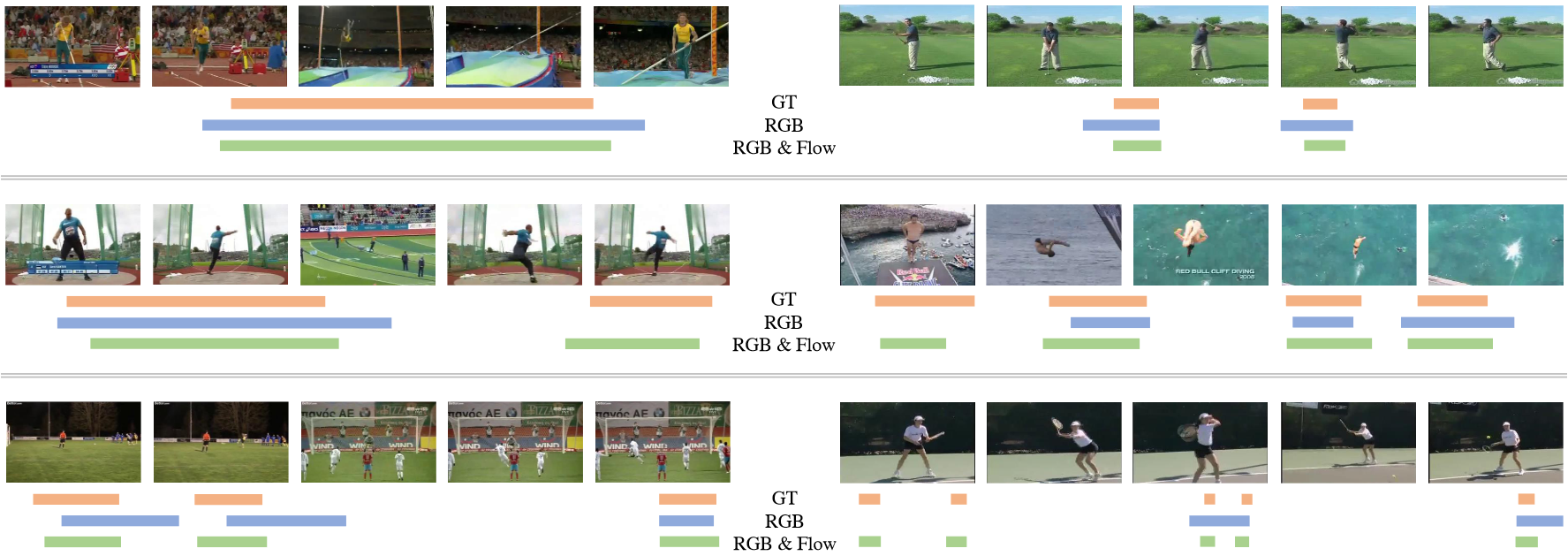

Qualitative Detection Results

Qualitative zero-shot results on THUMOS14. For various videos from novel categories, our method outputs good detection results, even though the number and duration of actions vary widely. RGB alone sometimes produces large deviations, or even misses action instances. By bringing in motion details, Flow can further correct or complete the RGB results.

Dataset Splits

Under the few-shot scenario, we create several dataset splits for training and testing; please check here for more details.

Acknowledgements

This research is supported by the National Key R&D Program of China (No. 2022ZD0160702), STCSM (No. 22511106101, No. 18DZ2270700, No. 21DZ1100100), 111 plan (No. BP0719010), and State Key Laboratory of UHD Video and Audio Production and Presentation. Weidi Xie would like to acknowledge the generous support of Yimeng Long and Yike Xie.